1️⃣ Introduction

In AI systems that retrieve or generate information, ranking quality and relevance are critical. Whether you are building a RAG-based assistant, a knowledge-driven chatbot, or a classical search engine, users expect the most accurate, contextually appropriate, and useful answers to appear first.

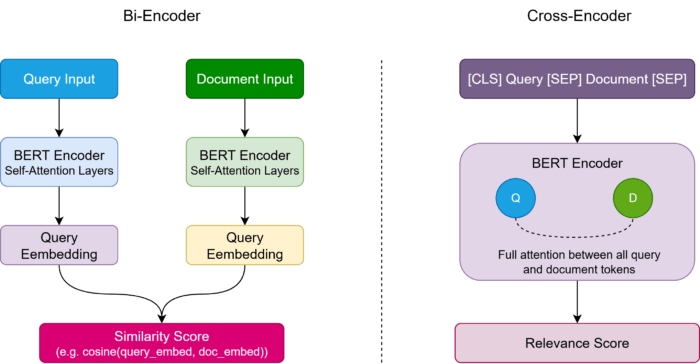

Traditional retrieval methods, such as keyword-based search (BM25) or bi-encoder embeddings, can capture some relevant results but often miss subtle relationships, domain-specific phrasing, or nuanced context cues. Cross-encoders address this gap by jointly encoding query–document pairs, allowing token-level interactions that improve precision, contextual understanding, and alignment with human judgment.

They are particularly valuable when accuracy is paramount, for instance:

- Re-ranking candidate documents in large retrieval pipelines

- Selecting the most relevant context for RAG-based assistants

- Handling domain-specific queries in healthcare, legal, or technical applications

What You Will Learn

- How cross-encoders work and why they often rank more precisely than BM25 or bi-encoders.

- How to construct query–document inputs and perform joint transformer encoding.

- How to score relevance from the [CLS] embedding via a linear layer or MLP.

- How to implement cross-encoder scoring and re-ranking in Python.

- How to combine fast retrieval methods (BM25/bi-encoders) with cross-encoder re-ranking.

- Examples of real-world applications.

This article will guide you through the inner workings, practical implementations, and best practices for cross-encoders, giving you a solid foundation to integrate them effectively into both retrieval and generation pipelines.

2️⃣ What Are Cross-Encoders?

A cross-encoder is a transformer model that takes a query and a document (or passage) together as input and produces a relevance score.

Unlike bi-encoders, which encode queries and documents independently and rely on vector similarity, cross-encoders allow full cross-attention between the query and document tokens. This enables the model to:

- Capture subtle semantic nuances

- Understand negations, comparisons, or cause-effect relationships

- Rank answers more accurately in both retrieval and generation settings
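To make the contrast concrete, here is a minimal sketch of how a bi-encoder scores documents. The `toy_encode` function is a hypothetical bag-of-words stand-in for a real embedding model. What matters is the structure: each document is encoded once, independently of the query, and the query meets the documents only through a single cosine similarity.

```python
import math
from collections import Counter


def toy_encode(text: str) -> Counter:
    """Hypothetical stand-in for a bi-encoder: a bag-of-words count vector.
    A real bi-encoder would return a dense transformer embedding."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


documents = [
    "battery recycling reduces environmental footprint",
    "wind turbines generate clean energy",
]
# Documents are encoded once, without seeing any query, so these vectors
# can be precomputed and stored in an index.
doc_vectors = [toy_encode(d) for d in documents]

query_vector = toy_encode("battery recycling")
# Query and documents interact only through one similarity number each.
bi_scores = [cosine(query_vector, v) for v in doc_vectors]
print(bi_scores)
```

A cross-encoder has no per-document vector to cache: the query and document must pass through the model together, which is exactly what lets their tokens interact.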

Input format example:

```
[CLS] Query Text [SEP] Document Text [SEP]
```

The [CLS] token embedding is passed through a classification or regression head to compute the relevance score.
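The pair format can be sketched in a few lines of Python. This is a simplified illustration, not a real tokenizer: whitespace splitting stands in for subword (WordPiece) tokenization, `build_pair_input` and `max_length` are hypothetical names, and only the document is truncated when the pair is too long (similar in spirit to the `only_second` truncation strategy in Hugging Face tokenizers). The segment ids mark which tokens belong to the query (0) and which to the document (1), as BERT-style models expect.

```python
def build_pair_input(query: str, document: str, max_length: int = 16):
    """Build a [CLS] query [SEP] document [SEP] sequence plus segment ids.

    Whitespace splitting is a stand-in for a real subword tokenizer.
    """
    q_tokens = query.lower().split()
    d_tokens = document.lower().split()

    # Reserve room for [CLS] and the two [SEP] tokens, then truncate the document only.
    budget = max_length - len(q_tokens) - 3
    if budget < 0:
        raise ValueError("query alone does not fit in max_length")
    d_tokens = d_tokens[:budget]

    tokens = ["[CLS]"] + q_tokens + ["[SEP]"] + d_tokens + ["[SEP]"]
    # Segment ids: 0 for [CLS] + query + first [SEP], 1 for document + final [SEP].
    segment_ids = [0] * (len(q_tokens) + 2) + [1] * (len(d_tokens) + 1)
    return tokens, segment_ids


tokens, segment_ids = build_pair_input(
    "recycling lithium-ion batteries",
    "Lithium-ion batteries should be processed with thermal pre-treatment",
)
print(tokens)
print(segment_ids)
```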

3️⃣ Why Cross-Encoders Matter in Both RAG and Classical Search

✅ Advantages:

- Precision & Context Awareness: Capture nuanced relationships between query and content.

- Alignment with Human Judgment: Produce rankings that tend to agree with human relevance judgments.

- Domain Adaptation: Can be fine-tuned on in-domain query–document pairs (legal, medical, technical, environmental).

⚠️ Trade-offs:

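- Computationally expensive: every query–document pair needs its own full transformer forward pass, so document representations cannot be precomputed and cost grows linearly with the number of candidates.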

- Not ideal for very large-scale retrieval on its own — best used as a re-ranker after a fast retrieval stage (BM25, bi-encoder, or other dense retrieval).
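A rough way to see this trade-off is to count transformer forward passes. The sketch below is back-of-the-envelope arithmetic under stated assumptions: every forward pass is treated as equal cost (ignoring sequence length and batching), the cost of the nearest-neighbour search itself is ignored, and the corpus size, query count, and `top_k` are hypothetical.

```python
def forward_passes(num_queries: int, corpus_size: int, top_k: int) -> dict:
    """Count transformer forward passes as a crude cost proxy for three setups."""
    return {
        # Cross-encoder over the whole corpus: one joint pass per (query, doc) pair.
        "cross_encoder_only": num_queries * corpus_size,
        # Bi-encoder: each document encoded once (reusable), plus one pass per query.
        "bi_encoder_only": corpus_size + num_queries,
        # Bi-encoder retrieval, then cross-encoder re-ranking of top_k per query.
        "retrieve_then_rerank": corpus_size + num_queries + num_queries * top_k,
    }


costs = forward_passes(num_queries=1_000, corpus_size=1_000_000, top_k=100)
for setup, passes in costs.items():
    print(f"{setup:>22}: {passes:,}")
```

Re-ranking the top 100 candidates per query costs 100,000 cross-encoder passes in total here, versus 1,000,000,000 for scoring every pair directly.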

4️⃣ How Cross-Encoders Work

Step 1: Input Construction

A query and a candidate document are combined into a single input sequence for the transformer.

```
[CLS] "Best practices for recycling lithium-ion batteries" [SEP]
"Lithium-ion batteries should be processed with thermal pre-treatment to reduce hazards." [SEP]
```

Step 2: Transformer Encoding (Joint)

The model processes this sequence, allowing cross-attention between query and document tokens.

- The query word “recycling” can directly attend to document words like “processed” and “reduce hazards”.

- Because attention spans both segments in every layer, the model can learn fine-grained relationships between query and document wording.
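One way to picture joint encoding is through the attention pattern. In the sketch below (special tokens omitted for simplicity; the function names are illustrative), a cross-encoder's attention mask over the concatenated sequence is all ones, while encoding query and document separately, as a bi-encoder does, behaves like a block-diagonal mask in which no query token can attend to a document token.

```python
def cross_encoder_mask(n_query: int, n_doc: int) -> list[list[int]]:
    """Joint encoding: every token may attend to every other token."""
    n = n_query + n_doc
    return [[1] * n for _ in range(n)]


def bi_encoder_mask(n_query: int, n_doc: int) -> list[list[int]]:
    """Separate encoding: tokens attend only within their own segment."""
    n = n_query + n_doc
    return [
        [1 if (i < n_query) == (j < n_query) else 0 for j in range(n)]
        for i in range(n)
    ]


def query_to_doc_links(mask: list[list[int]], n_query: int) -> int:
    """Count allowed attention edges from query positions to document positions."""
    return sum(mask[i][j] for i in range(n_query) for j in range(n_query, len(mask)))


n_query, n_doc = 3, 5
print("cross-encoder:", query_to_doc_links(cross_encoder_mask(n_query, n_doc), n_query))
print("bi-encoder:   ", query_to_doc_links(bi_encoder_mask(n_query, n_doc), n_query))
```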

Step 3: Relevance Scoring

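The final [CLS] token embedding is passed through a classification or regression head to produce a relevance score. Some models squash this score into the 0 to 1 range with a sigmoid; others, including the cross-encoder/ms-marco-MiniLM-L-6-v2 model used in the examples below, return an unbounded logit where higher means more relevant.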
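The scoring head itself is small. Below is a minimal sketch of a single linear layer over the [CLS] vector, followed by an optional sigmoid. The 4-dimensional vector and the weights are made-up values for illustration; real models use much larger hidden sizes (e.g. 768 in BERT-base) and learned weights.

```python
import math


def linear_head(cls_vector: list[float], weights: list[float], bias: float) -> float:
    """One linear layer: project the [CLS] embedding to a single relevance logit."""
    return sum(w * x for w, x in zip(weights, cls_vector)) + bias


def sigmoid(logit: float) -> float:
    """Optionally squash an unbounded logit into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-logit))


# Hypothetical values for illustration only.
cls_vector = [0.2, -1.0, 0.5, 0.3]
weights = [1.5, -0.5, 2.0, 0.0]
bias = -0.1

logit = linear_head(cls_vector, weights, bias)
print(f"logit={logit:.3f}, probability={sigmoid(logit):.3f}")
```

An MLP head would insert a hidden layer with a nonlinearity before this final projection. The sigmoid step matters mainly when scores must be compared against a fixed threshold.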

The following diagram depicts these steps:

5️⃣ Why Use Cross-Encoders?

✅ Precision → Capture subtle differences such as negations, comparisons, and cause-effect relationships.

✅ Contextual Matching → Understand domain-specific queries and rare terminology.

✅ Human-Like Judgment → Often align better with human rankings than BM25 or bi-encoder scores do.

⚠️ Trade-Off: Expensive. They require a separate joint inference pass for every query–document pair, which makes them impractical for searching a very large corpus directly.

6️⃣ Cross-Encoders in a Retrieval Pipeline

Because scoring every document in a corpus this way is too slow, cross-encoders are typically used as re-rankers:

- Candidate Retrieval (fast)
  - Use BM25 or a bi-encoder to retrieve the top-k candidates.
- Re-Ranking (precise)
  - Apply the cross-encoder only to those k candidates.
- Final Results
  - The most relevant documents surface at the top.
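The three stages can be written as one generic function. This is a sketch, not a library API: `retrieve_then_rerank`, `word_overlap`, and `toy_reranker` are hypothetical, and the toy reranker is a placeholder where a real system would call the cross-encoder (for example via `model.predict` on the candidate pairs, as in section 7). The call log confirms that the expensive scorer runs only k times.

```python
from typing import Callable

Scorer = Callable[[str, str], float]


def retrieve_then_rerank(query: str, corpus: list[str], first_stage: Scorer,
                         reranker: Scorer, k: int) -> list[tuple[str, float]]:
    # Stage 1 (fast): score every document cheaply and keep the top-k.
    candidates = sorted(corpus, key=lambda doc: first_stage(query, doc), reverse=True)[:k]
    # Stage 2 (precise): score only the k candidates with the expensive model.
    scored = [(doc, reranker(query, doc)) for doc in candidates]
    # Stage 3: final results, best first.
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


def word_overlap(query: str, doc: str) -> float:
    """Hypothetical cheap first stage: number of shared lowercase words."""
    return len(set(query.lower().split()) & set(doc.lower().split()))


reranker_calls: list[str] = []


def toy_reranker(query: str, doc: str) -> float:
    """Placeholder for a cross-encoder; logs each call so we can count them."""
    reranker_calls.append(doc)
    return word_overlap(query, doc) / len(doc.split())


corpus = [
    "battery recycling reduces environmental footprint",
    "recycling battery packs",
    "wind turbines generate clean energy",
    "battery chemistry basics",
]
results = retrieve_then_rerank("battery recycling methods", corpus,
                               word_overlap, toy_reranker, k=2)
print(results)
print(len(reranker_calls), "reranker calls for", len(corpus), "documents")
```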

7️⃣ Python Examples: Scoring and Re-Ranking with a Cross-Encoder

7.1 Scoring with a Cross-Encoder

```
pip install sentence-transformers
```

```python
from sentence_transformers import CrossEncoder

# Load a pre-trained cross-encoder model
model = CrossEncoder('cross-encoder/ms-marco-MiniLM-L-6-v2')

# Query and documents
query = "Best practices for recycling lithium-ion batteries"
documents = [
    "Lithium-ion batteries should be processed with thermal pre-treatment to reduce hazards.",
    "Wind turbines generate clean energy in coastal regions.",
    "Battery recycling reduces environmental footprint significantly."
]

# Create (query, document) pairs
pairs = [(query, doc) for doc in documents]

# Predict relevance scores (raw logits for this model; higher = more relevant)
scores = model.predict(pairs)

for doc, score in zip(documents, scores):
    print(f"Score: {score:.4f} | {doc}")
```

```
Score: 0.4742 | Lithium-ion batteries should be processed with thermal pre-treatment to reduce hazards.
Score: -11.2687 | Wind turbines generate clean energy in coastal regions.
Score: -0.7598 | Battery recycling reduces environmental footprint significantly.
```

👉 Both recycling-related documents score far above the unrelated wind-turbine document. These are raw logits, so their relative order matters more than their sign.
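`model.predict` returns scores in the same order as the input pairs, so turning them into a ranking is a separate step. The sketch below reuses the three logits printed above (copied by hand, so no model download is needed), sorts by score, and shows the optional sigmoid conversion.

```python
import math

# Logits copied from the output above (higher = more relevant).
results = [
    ("Lithium-ion batteries should be processed with thermal pre-treatment to reduce hazards.", 0.4742),
    ("Wind turbines generate clean energy in coastal regions.", -11.2687),
    ("Battery recycling reduces environmental footprint significantly.", -0.7598),
]

# Rank by logit; the sigmoid is monotonic, so applying it afterwards keeps this order.
ranked = sorted(results, key=lambda pair: pair[1], reverse=True)

for rank, (doc, logit) in enumerate(ranked, start=1):
    probability = 1.0 / (1.0 + math.exp(-logit))
    print(f"{rank}. {logit:8.4f} (sigmoid {probability:.3f}) | {doc}")
```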

7.2 Cross-Encoder as Re-Ranker

```
pip install rank-bm25 sentence-transformers
```

```python
from rank_bm25 import BM25Okapi
from sentence_transformers import CrossEncoder

# Candidate documents
corpus = [
    "Wind turbines increase electricity generation capacity in coastal regions.",
    "Battery recycling reduces lifecycle carbon footprint of EVs.",
    "Hydrogen electrolyzers are becoming more efficient in Japan.",
]

# Step 1: BM25 retrieval (simple lowercase whitespace tokenization)
tokenized_corpus = [doc.lower().split() for doc in corpus]
bm25 = BM25Okapi(tokenized_corpus)

query = "Efficiency of hydrogen electrolyzers"
bm25_scores = bm25.get_scores(query.lower().split())

# Select the top-k candidates by BM25 score (get_scores returns a NumPy array)
top_k = 2
top_docs = [corpus[i] for i in bm25_scores.argsort()[-top_k:][::-1]]

# Step 2: Cross-encoder re-ranking of the shortlist only
model = CrossEncoder('cross-encoder/ms-marco-MiniLM-L-6-v2')
pairs = [(query, doc) for doc in top_docs]
rerank_scores = model.predict(pairs)

print("\nFinal Ranked Results:")
for doc, score in sorted(zip(top_docs, rerank_scores), key=lambda x: x[1], reverse=True):
    print(f"{score:.4f} | {doc}")
```

```
Final Ranked Results:
5.5779 | Hydrogen electrolyzers are becoming more efficient in Japan.
-11.3173 | Battery recycling reduces lifecycle carbon footprint of EVs.
```

Here, BM25 produces a rough shortlist, and the cross-encoder re-scores it so that the document that actually answers the query ranks first.
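In a RAG assistant, the re-ranked list usually feeds a prompt with limited space. Below is a minimal sketch of one possible selection policy: keep the highest-scoring passages until a budget is filled, and stop once scores fall below a threshold. `select_context`, `max_chars`, and `min_score` are hypothetical; a real system would budget in tokens rather than characters and tune the threshold on its own data. The example input reuses the two re-ranked results above.

```python
def select_context(ranked: list[tuple[str, float]], max_chars: int = 200,
                   min_score: float = 0.0) -> list[str]:
    """Greedily keep top passages within a character budget (hypothetical policy).

    ranked must be sorted by descending score.
    """
    selected, used = [], 0
    for passage, score in ranked:
        if score < min_score:
            break  # the list is sorted, so every remaining score is lower too
        if used + len(passage) > max_chars:
            continue  # skip passages that would overflow the budget
        selected.append(passage)
        used += len(passage)
    return selected


ranked = [
    ("Hydrogen electrolyzers are becoming more efficient in Japan.", 5.5779),
    ("Battery recycling reduces lifecycle carbon footprint of EVs.", -11.3173),
]
context = "\n".join(select_context(ranked))
print(context)
```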

8️⃣ Real-World Applications

- Search Engines → Re-ranking documents for more precise results

- Legal & Policy Research → Matching queries to exact statutes/clauses

- Healthcare AI → Ranking medical literature for clinical questions

- Customer Support → Matching troubleshooting queries to correct FAQ entries

- E-commerce → Ranking products based on nuanced query matches

9️⃣ Strengths vs. Limitations

| Feature | Cross-Encoder | Bi-Encoder | BM25 |
|---|---|---|---|
| Precision | ✅ High | Medium | Low-Medium |
| Speed (Large Corpus) | ❌ Slow | ✅ Fast | ✅ Very Fast |
| Scalability | ❌ Limited | ✅ High | ✅ Very High |
| Contextual Understanding | ✅ Strong | Medium | ❌ Weak |
| Best Use Case | Re-Ranking | Retrieval | Candidate Retrieval |

🔟 Bi-Encoder vs Cross-Encoder Architecture

Figure: Bi-Encoder vs Cross-Encoder

💡 Conclusion

Cross-encoders are the precision workhorses of modern information retrieval.

They are not designed to scale across millions of documents alone, but as re-rankers, they deliver results that feel much closer to human judgment.

If you’re building any system where accuracy is critical — from search engines to knowledge assistants — a cross-encoder should be part of your stack.