Data privacy is one of the biggest challenges in deploying AI systems. From healthcare to finance, sensitive datasets are often required to train or run machine learning models — but sharing raw data with cloud providers or third-party services can lead to regulatory, security, and trust issues.

What if we could train and run models directly on encrypted data?

That’s the promise of Homomorphic Encryption (HE) — a cryptographic technique that allows computations on ciphertexts without ever decrypting them.

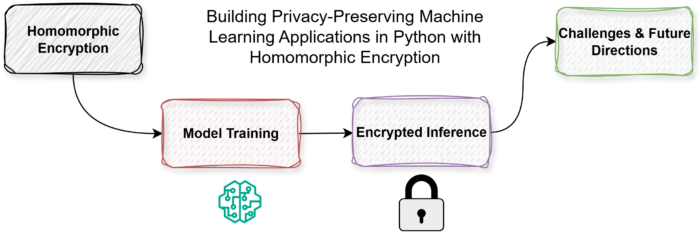

In this blog, we’ll build a series of demo applications in Python that showcase how homomorphic encryption can power privacy-preserving machine learning:

- 🔑 Introduction to homomorphic encryption
- 🧮 Linear regression on encrypted data
- 🌐 FastAPI-based encrypted inference service
- ✅ Logistic regression classification with encrypted medical data
- 🚀 Limitations, challenges, and the road ahead

1. What is Homomorphic Encryption?

Traditional encryption protects data at rest (storage) and in transit (network), but not during computation. Once data is processed, it must be decrypted — exposing it to whoever is running the computation.

Homomorphic encryption changes this paradigm. It enables computation on encrypted values such that when decrypted, the result matches the computation as if it were done on plaintext.

For example:

- Client encrypts 5 and 7
- Server computes (enc_5 + enc_7)
- Client decrypts → gets 12

The server never saw the numbers 5 or 7, but still produced a meaningful result.
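The 5 + 7 exchange can be tried without any HE library. The sketch below is a toy version of the Paillier cryptosystem, which is only additively homomorphic and is a different scheme from the CKKS scheme used in the demos that follow. The tiny primes and all function names are illustrative assumptions; nothing here is secure enough for real use.

```python
import math
import secrets


def generate_keys(p=1789, q=1861):
    """Build a toy Paillier key pair from two small primes (NOT secure)."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p-1, q-1)
    mu = pow(lam, -1, n)  # valid because the generator is fixed to n + 1
    return n, (lam, mu)


def encrypt(n, message):
    """Client side: encrypt an integer 0 <= message < n under public key n."""
    n_sq = n * n
    while True:
        r = secrets.randbelow(n - 1) + 1  # random blinding factor
        if math.gcd(r, n) == 1:
            break
    return (pow(n + 1, message, n_sq) * pow(r, n, n_sq)) % n_sq


def add_encrypted(n, c1, c2):
    """Server side: multiplying ciphertexts adds the hidden plaintexts."""
    return (c1 * c2) % (n * n)


def decrypt(n, private_key, ciphertext):
    """Client side: recover the plaintext with the private key."""
    lam, mu = private_key
    l_value = (pow(ciphertext, lam, n * n) - 1) // n
    return (l_value * mu) % n


public_n, private_key = generate_keys()
enc_5 = encrypt(public_n, 5)
enc_7 = encrypt(public_n, 7)
enc_sum = add_encrypted(public_n, enc_5, enc_7)  # needs no private key
print(decrypt(public_n, private_key, enc_sum))   # 12
```

Only `decrypt` touches the private key, which is the property the client/server split above relies on.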

This opens the door for privacy-preserving AI services where cloud providers can run models on user data without ever seeing the raw inputs.

2. Python Libraries for Homomorphic Encryption

Several libraries bring HE to Python developers:

- Pyfhel → general-purpose HE (wrapper around Microsoft SEAL)
- TenSEAL → optimized for machine learning, supports encrypted vectors & tensors (also built on Microsoft SEAL)
- HElib → C++ library from IBM; Python access is through third-party wrappers

For our demos, we’ll use TenSEAL, which is designed for encrypted machine learning use cases.

Install it:

```bash
pip install tenseal
```

3. Demo: Linear Regression with Encrypted Data

Let’s start with a toy regression task: predict house price from house size using encrypted training data.

Step 1: Setup TenSEAL Context

```python
import tenseal as ts
import numpy as np


def create_context():
    context = ts.context(
        ts.SCHEME_TYPE.CKKS,
        poly_modulus_degree=8192,
        coeff_mod_bit_sizes=[60, 40, 40, 60],
    )
    context.global_scale = 2**40
    context.generate_galois_keys()
    return context
```

This function creates a CKKS homomorphic encryption context with polynomial modulus degree 8192, precision scale 2^40, and Galois keys enabled. This context is the foundation for performing encrypted computations (like addition, multiplication, or rotations) on encrypted real numbers.
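To make these parameter choices more concrete, the plain-Python sketch below derives a few quantities from them. It assumes the usual CKKS conventions in Microsoft SEAL, which TenSEAL builds on: N/2 slots per ciphertext, one rescaling level per interior prime, and SEAL's default 128-bit-security ceilings on the total coefficient modulus. The variable names are illustrative and not part of any library API.

```python
poly_modulus_degree = 8192
coeff_mod_bit_sizes = [60, 40, 40, 60]

# CKKS packs up to N/2 real numbers into one ciphertext.
slots = poly_modulus_degree // 2

# The first and last primes bracket the chain; each interior prime
# pays for one multiplication followed by a rescale.
mult_depth = len(coeff_mod_bit_sizes) - 2

# Total modulus size must stay under the security ceiling for this N.
# Ceilings below are SEAL's defaults for 128-bit classical security.
MAX_BITS_128 = {4096: 109, 8192: 218, 16384: 438}
total_modulus_bits = sum(coeff_mod_bit_sizes)
within_budget = total_modulus_bits <= MAX_BITS_128[poly_modulus_degree]

print(f"slots per ciphertext: {slots}")
print(f"multiplicative depth: {mult_depth}")
print(f"modulus bits: {total_modulus_bits} (within budget: {within_budget})")
```

Under these assumptions the context allows two multiplications in sequence, which is what the training step in this demo uses: one by the plaintext weight and one between two ciphertexts.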

Step 2: Sample Training Data

```python
X = np.array([1, 2, 3, 4, 5], dtype=float)
y = np.array([15, 30, 45, 60, 75], dtype=float)  # price = 15 * size
```

This creates a toy dataset where the price is directly proportional to the size, with a multiplier of 15.

Step 3: Encrypt Data

```python
context = create_context()
enc_X = [ts.ckks_vector(context, [val]) for val in X]
enc_y = [ts.ckks_vector(context, [val]) for val in y]
```

This snippet takes the plaintext training data (X and y) and converts each number into an encrypted vector using CKKS. After this step, you can do computations (like addition, multiplication, scaling) directly on the encrypted data without ever decrypting it.

Step 4: Training (Simplified Gradient Descent)

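For demo purposes, we decrypt the gradients inside the training loop, so whoever runs this code must hold the secret key. In a real HE setup, the party doing the computation would not hold that key, and all intermediate values could remain encrypted.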

```python
def train_linear_regression(enc_X, enc_y, lr=0.1, epochs=20):
    w, b = 0.0, 0.0
    n = len(enc_X)
    for epoch in range(epochs):
        grad_w, grad_b = 0, 0
        for xi, yi in zip(enc_X, enc_y):
            y_pred = xi * w + b
            error = y_pred - yi
            grad_w += (xi * error).decrypt()[0]
            grad_b += error.decrypt()[0]
        grad_w /= n
        grad_b /= n
        w -= lr * grad_w
        b -= lr * grad_b
        print(f"Epoch {epoch+1}: w={w:.4f}, b={b:.4f}")
    return w, b
```

The code trains a simple linear regression model using gradient descent. It starts with weight and bias set to zero, then for each epoch it computes predictions, calculates the error, and derives gradients with respect to the weight and bias. These gradients are averaged, then used to update the parameters by stepping in the opposite direction of the gradient. Although the inputs are encrypted, the gradients are decrypted during computation (for demo purposes). Finally, the function prints progress each epoch and returns the learned weight and bias.

Step 5: Train and Predict

```python
w, b = train_linear_regression(enc_X, enc_y)
print(f"Final model: price = {w:.2f} * size + {b:.2f}")

enc_input = ts.ckks_vector(context, [6.0])
enc_pred = enc_input * w + b
print("Prediction for size=6:", enc_pred.decrypt()[0])
```

The code trains the model, prints the learned equation, and demonstrates making a prediction on new encrypted data.

Output:

```
(env) root@81eb33810340:/workspace/he-ml# python lin-reg-enc-data.py
Epoch 1: w=16.5000, b=4.5000
Epoch 2: w=13.5000, b=3.6000
Epoch 3: w=14.0700, b=3.6900
Epoch 4: w=13.9860, b=3.6000
Epoch 5: w=14.0214, b=3.5442
Epoch 6: w=14.0346, b=3.4834
Epoch 7: w=14.0515, b=3.4246
Epoch 8: w=14.0675, b=3.3667
Epoch 9: w=14.0832, b=3.3098
Epoch 10: w=14.0987, b=3.2539
Epoch 11: w=14.1140, b=3.1989
Epoch 12: w=14.1289, b=3.1448
Epoch 13: w=14.1437, b=3.0916
Epoch 14: w=14.1581, b=3.0394
Epoch 15: w=14.1724, b=2.9880
Epoch 16: w=14.1864, b=2.9375
Epoch 17: w=14.2001, b=2.8878
Epoch 18: w=14.2136, b=2.8390
Epoch 19: w=14.2269, b=2.7910
Epoch 20: w=14.2400, b=2.7438
Final model: price = 14.24 * size + 2.74
Prediction for size=6: 88.1838602661561
```

✅ We trained on encrypted inputs and ran inference on an encrypted query. Only the gradients were decrypted during training, as noted in Step 4.
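One way to sanity-check the encrypted run is to repeat the same gradient descent on plaintext and compare the numbers. The sketch below is a plain-Python reference using the same data, learning rate, and epoch count; `train_plain` and `history` are illustrative names that do not appear in the demo script.

```python
def train_plain(xs, ys, lr=0.1, epochs=20):
    """Plaintext gradient descent mirroring the encrypted training loop."""
    w, b = 0.0, 0.0
    n = len(xs)
    history = []
    for _ in range(epochs):
        # Same gradients as the encrypted version: mean of x * error and error
        grad_w = sum(x * ((x * w + b) - y) for x, y in zip(xs, ys)) / n
        grad_b = sum((x * w + b) - y for x, y in zip(xs, ys)) / n
        w -= lr * grad_w
        b -= lr * grad_b
        history.append((w, b))
    return w, b, history


xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [15.0, 30.0, 45.0, 60.0, 75.0]
w, b, history = train_plain(xs, ys)

print(f"Epoch 1: w={history[0][0]:.4f}, b={history[0][1]:.4f}")
print(f"Final:   w={w:.4f}, b={b:.4f}")
print(f"Prediction for size=6: {6.0 * w + b:.4f}")
```

The values should agree with the log above up to the small approximation error that CKKS introduces. Note that the data follows price = 15 * size exactly, so the bias of about 2.74 after 20 epochs shows the model has not fully converged yet; more epochs would move it toward w = 15, b = 0.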

4. Challenges and Limitations

While homomorphic encryption (HE) makes it possible to run machine learning on encrypted data, there are several practical challenges that must be understood before deploying such systems at scale:

4.1 Performance Overhead

- Problem: HE computations are significantly slower compared to traditional machine learning on plaintext data.
  - For example, a single encrypted multiplication can take milliseconds (additions are cheaper), while the same operation on plaintext takes nanoseconds.
  - Complex models that involve thousands or millions of operations (like deep neural networks) can become prohibitively slow.
- Why it happens: Encryption schemes like CKKS or BFV encode values as large polynomials. Each multiplication or addition involves expensive polynomial arithmetic, number-theoretic transforms (NTT), and modulus switching.
- Impact: HE is currently more suitable for smaller models (linear regression, logistic regression, decision trees) than large-scale deep learning, unless heavily optimized.

At a glance, the limitations covered in this section:

- Performance → HE computations are slower than plaintext ML.
- Ciphertext size → Encrypted data is much larger than plaintext.
- Limited operations → Non-linear functions (sigmoid, softmax) must be approximated.
- Training → Training fully on encrypted data is possible but heavy; many systems use federated learning + HE for practicality.
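To give a feel for the polynomial arithmetic mentioned under "Why it happens", here is a minimal sketch of multiplication in the ring Z_q[x]/(x^n + 1), the structure that CKKS and BFV ciphertexts live in. It uses the schoolbook O(n²) method with a tiny n and a made-up modulus q = 97 purely for illustration; production libraries use NTT-based multiplication and much larger parameters.

```python
def negacyclic_multiply(a, b, q):
    """Multiply two polynomials modulo (x^n + 1) and modulo q.

    Polynomials are coefficient lists, lowest degree first.
    """
    n = len(a)
    result = [0] * n
    for i, ai in enumerate(a):
        for j, bj in enumerate(b):
            k = i + j
            if k < n:
                result[k] = (result[k] + ai * bj) % q
            else:
                # x^n is congruent to -1, so wrapped terms flip sign
                result[k - n] = (result[k - n] - ai * bj) % q
    return result


q = 97               # toy modulus, far smaller than real parameters
a = [1, 1, 0, 0]     # 1 + x
b = [0, 0, 0, 1]     # x^3
product = negacyclic_multiply(a, b, q)
print(product)       # [96, 0, 0, 1], i.e. x^3 - 1 with -1 written as 96 mod 97
```

With n = 8192, as in the demo context, the schoolbook method would need 8192² ≈ 67 million coefficient products for a single polynomial multiplication, which is why the NTT matters so much in practice.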

4.2 Ciphertext Size & Memory Consumption

- Problem: Encrypted data (ciphertexts) are much larger than plaintext data.
  - For example, with the CKKS parameters used in the demo above, a single ciphertext is on the order of hundreds of kilobytes, whereas a raw floating-point value is just 8 bytes.
- Why it happens: HE ciphertexts must include redundancy and structure (e.g., modulus, polynomial coefficients) to allow encrypted computations.
- Impact:
  - Storing large datasets in encrypted form can require 10–100× more space even when many values are packed into each ciphertext, and far more when every value gets its own ciphertext.
  - Network communication between client and server becomes bandwidth-heavy.
  - Memory usage on the server can be a bottleneck if too many encrypted vectors are processed simultaneously.
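A rough size estimate can be worked out from the context parameters alone. The sketch below assumes the in-memory layout used by SEAL-style libraries: a fresh ciphertext holds two polynomials, each with one 64-bit word per coefficient per data prime, and the last prime in the chain is reserved for key switching. The helper name is hypothetical, and serialized sizes will differ somewhat because of headers and compression.

```python
def estimate_ciphertext_bytes(poly_modulus_degree, coeff_mod_bit_sizes):
    """Back-of-the-envelope size of a fresh ciphertext in bytes."""
    num_polys = 2                                  # a fresh ciphertext is a pair
    data_primes = len(coeff_mod_bit_sizes) - 1     # last prime is key-switching only
    bytes_per_coeff = 8                            # one 64-bit word per prime
    return num_polys * poly_modulus_degree * data_primes * bytes_per_coeff


ct_bytes = estimate_ciphertext_bytes(8192, [60, 40, 40, 60])
slots = 8192 // 2                                  # values one ciphertext can hold

overhead_single = ct_bytes / 8                     # one float per ciphertext
overhead_packed = ct_bytes / (slots * 8)           # all slots filled

print(f"ciphertext size: {ct_bytes} bytes")        # 393216
print(f"overhead, one value per ciphertext: {overhead_single:.0f}x")   # 49152x
print(f"overhead, fully packed: {overhead_packed:.0f}x")               # 12x
```

The gap between the last two numbers is the argument for packing whole feature vectors into a single ciphertext rather than encrypting values one at a time.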

4.3 Limited Supported Operations

- Problem: Homomorphic encryption schemes support only a restricted set of operations efficiently.
  - Additions and multiplications are natural, so anything that can be written as a polynomial can be evaluated.
  - Non-linear functions like sigmoid, tanh, softmax, ReLU are not directly supported.
- Workaround: Use polynomial approximations of non-linear functions.
  - Example: Replace the logistic sigmoid with a simple polynomial.
  - These approximations work well in limited ranges but reduce accuracy.
- Impact:
  - High-accuracy deep learning models cannot be fully ported to HE without approximation losses.
  - Research is ongoing into better polynomial or piecewise approximations that preserve accuracy while being HE-friendly.
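The trade-off behind polynomial approximation is easy to see on plaintext. The sketch below compares the true sigmoid with one degree-3 polynomial, 0.5 + 0.197x − 0.004x³, which is the approximation used in TenSEAL's logistic regression tutorial and is intended for roughly the interval [-5, 5]. Treat the choice of polynomial as an assumption: it is not necessarily the one the original example had in mind.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_poly(x):
    """Degree-3 stand-in for sigmoid: only additions and multiplications."""
    return 0.5 + 0.197 * x - 0.004 * x**3


# Worst-case error on a grid covering the intended range [-5, 5]
grid = [i / 10 for i in range(-50, 51)]
max_err_in_range = max(abs(sigmoid(x) - sigmoid_poly(x)) for x in grid)

# Error at a point well outside that range
err_outside = abs(sigmoid(10.0) - sigmoid_poly(10.0))

print(f"max error on [-5, 5]: {max_err_in_range:.3f}")
print(f"error at x = 10:      {err_outside:.3f}")
```

Inside the range the polynomial stays within a few hundredths of the true sigmoid, while at x = 10 it is off by more than the entire output range of the function. In practice this means inputs have to be scaled so that pre-activations stay inside the approximation interval.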

4.4 Training on Encrypted Data

- Problem: Training machine learning models entirely on encrypted data is computationally very expensive.
  - Gradient descent requires repeated multiplications, non-linear activations, and updates across many iterations.
  - Even a small logistic regression trained under HE can take hours or days.
- Practical Approach:
  - Federated Learning + HE:
    - Clients keep data locally.
    - They compute model updates (gradients) on plaintext but encrypt them before sending to a central server.
    - The server aggregates encrypted updates (without seeing individual contributions) and updates the global model.
  - This hybrid approach combines efficiency with privacy, making it more realistic than fully HE-based training.
- Impact: End-to-end encrypted training is still an active research area, with most production-ready solutions focusing on encrypted inference or encrypted aggregation of updates.
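The encrypted-aggregation step can be sketched with a toy additively homomorphic scheme. The code below uses a miniature Paillier setup with tiny primes (not secure), fixed-point encoding for the gradients, and a single key holder who decrypts only the aggregate. All names, the scale factor, and the sample gradient values are assumptions made for illustration; who holds the decryption key in a real deployment is a design decision this sketch does not address.

```python
import math
import secrets

# Toy Paillier parameters (tiny primes, illustration only; NOT secure)
P, Q = 1789, 1861
N = P * Q
N_SQ = N * N
LAM = (P - 1) * (Q - 1) // math.gcd(P - 1, Q - 1)  # lcm(P-1, Q-1)
MU = pow(LAM, -1, N)
SCALE = 1000  # fixed-point precision: three decimal places


def encrypt_update(value):
    """Client side: fixed-point encode a gradient, then encrypt it."""
    m = round(value * SCALE) % N       # negative values wrap around mod N
    while True:
        r = secrets.randbelow(N - 1) + 1
        if math.gcd(r, N) == 1:
            break
    return (pow(N + 1, m, N_SQ) * pow(r, N, N_SQ)) % N_SQ


def aggregate(ciphertexts):
    """Server side: multiplying ciphertexts adds the hidden plaintexts."""
    total = 1
    for c in ciphertexts:
        total = (total * c) % N_SQ
    return total


def decrypt_sum(ciphertext):
    """Key holder: decrypt the aggregate and undo the fixed-point encoding."""
    m = ((pow(ciphertext, LAM, N_SQ) - 1) // N * MU) % N
    if m > N // 2:                     # map back to a signed value
        m -= N
    return m / SCALE


client_updates = [0.25, -0.10, 0.40]   # hypothetical per-client gradients
encrypted = [encrypt_update(u) for u in client_updates]
encrypted_sum = aggregate(encrypted)   # server never decrypts individual updates
mean_update = decrypt_sum(encrypted_sum) / len(client_updates)
print(round(mean_update, 4))           # 0.1833
```

The server only ever multiplies ciphertexts, so it learns nothing about any single client's gradient; the key holder sees the sum but not the individual terms.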

Homomorphic encryption is a breakthrough for privacy-preserving machine learning, but it comes with trade-offs: slower computations, larger ciphertexts, limited function support, and impracticality for large-scale training. For now, HE is most effective in encrypted inference and in combination with federated learning for training.

5. Future Directions

Homomorphic encryption for machine learning is still in its early stages, but the pace of research and applied innovation is accelerating. The next few years will likely bring advancements that address today’s limitations and open new possibilities for privacy-preserving AI. Here are some promising directions:

5.1 Federated Learning with Homomorphic Encryption

- What it is:
  - In federated learning, multiple clients (e.g., hospitals, banks, mobile devices) train a shared model collaboratively without centralizing raw data.
  - Each client computes local updates (gradients or weights) and sends them to a central server for aggregation.
  - With HE, these updates can be encrypted before transmission. The server aggregates encrypted updates and sends back an improved global model, all without ever seeing the clients’ raw data or individual gradients.
- Why it matters:
  - Protects sensitive datasets such as medical records, financial transactions, or user behavior logs.
  - Prevents the server or malicious insiders from inferring private information from model updates.
  - Enables cross-organization collaboration, e.g., pharmaceutical companies jointly training models on encrypted clinical trial data.
- Challenges ahead:
  - Scaling to millions of clients while keeping training efficient.
  - Handling non-IID data (when different clients’ data distributions differ significantly).
  - Balancing HE’s computational overhead with the real-time needs of federated learning.

5.2 Encrypted Deep Learning

- The vision: Run full-scale deep learning models like Convolutional Neural Networks (CNNs) for image classification or Transformers for natural language processing directly on encrypted inputs.
- Progress so far:
  - Research prototypes have shown CNNs running on encrypted images for tasks like digit recognition (MNIST) or medical imaging.
  - Transformers under HE are being studied for privacy-preserving NLP, where users can query encrypted documents without revealing their text.
- Why it’s hard:
  - Deep models rely heavily on non-linear functions (ReLU, softmax, attention mechanisms), which HE does not natively support.
  - Even polynomial approximations for these functions become unstable as model depth increases.
  - The ciphertext growth and computational cost scale rapidly with network complexity.
- The future:
  - Research into HE-friendly neural architectures: custom-designed layers that avoid costly operations.
  - Use of bootstrapping optimizations (refreshing ciphertexts) to enable deeper computations.
  - Hybrid models where only the most privacy-sensitive layers are run under HE, while less critical parts run in plaintext.

5.3 Hybrid Privacy Technologies

Homomorphic encryption is powerful, but it isn’t a silver bullet. The most promising direction is combining HE with other privacy-preserving technologies to build robust, end-to-end secure ML systems:

- HE + Differential Privacy (DP):
  - HE ensures the data remains encrypted during computation.
  - DP adds statistical noise to outputs or gradients to prevent leakage about individual records.
  - Together, they provide both cryptographic security and formal privacy guarantees.
- HE + Secure Multi-Party Computation (SMPC):
  - SMPC splits data across multiple parties who jointly compute without revealing their shares.
  - HE can accelerate or simplify SMPC protocols by reducing communication rounds.
  - This hybrid approach is useful for high-stakes collaborations (e.g., banks jointly detecting fraud without revealing customer data).
- HE + Trusted Execution Environments (TEE):
  - TEE (like Intel SGX) provides hardware-based secure enclaves.
  - HE can complement TEEs by reducing the trust required in hardware vendors: even if an enclave is compromised, the data remains encrypted.
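The "splits data across multiple parties" idea behind SMPC can be illustrated with additive secret sharing, one of its basic building blocks. The sketch below is a simplified, assumption-laden example: the modulus, the party count, and the two bank totals are made up, and real protocols add authentication, networking, and protection against misbehaving parties.

```python
import secrets

MODULUS = 2**61 - 1  # all arithmetic is done modulo this value


def share(secret, num_parties):
    """Split a secret into random shares that sum to it modulo MODULUS."""
    shares = [secrets.randbelow(MODULUS) for _ in range(num_parties - 1)]
    last = (secret - sum(shares)) % MODULUS
    return shares + [last]


def reconstruct(shares):
    """Combine all shares to recover the secret."""
    return sum(shares) % MODULUS


# Two banks split their private counts among three compute parties.
bank_a_shares = share(120, 3)   # hypothetical count of flagged transactions
bank_b_shares = share(45, 3)

# Each party adds the two shares it holds; no party sees either input.
summed_shares = [(a + b) % MODULUS for a, b in zip(bank_a_shares, bank_b_shares)]

print(reconstruct(summed_shares))  # 165
```

Any subset of fewer than all three shares is uniformly random and reveals nothing about the inputs, which is the property that lets the parties compute the joint total without disclosing their own figures.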

5.4 Looking Ahead

The long-term vision is fully private AI pipelines, where:

- Data is encrypted at collection.
- Training happens across multiple entities without any party seeing the raw data.
- Inference is run on encrypted queries, producing encrypted outputs.
- Clients alone decrypt results, ensuring data confidentiality, model confidentiality, and output confidentiality.

If today’s limitations are addressed, such pipelines could transform industries like:

- Healthcare: AI diagnosis on encrypted medical scans without hospitals sharing raw images.
- Finance: Fraud detection on encrypted transaction streams.
- Government & Defense: Secure intelligence sharing across agencies.
- Consumer Tech: Voice assistants or chatbots that process encrypted user inputs without “listening in.”

6. Conclusion

The future of homomorphic encryption in machine learning is not about HE alone, but about ecosystems of privacy technologies — federated learning for collaboration, HE for encrypted computation, DP for statistical privacy, SMPC for secure multi-party workflows, and TEEs for hardware-level isolation. Together, these will bring us closer to a world where AI can learn from everyone, without exposing anyone.
