Practical Guide for AI Engineers with Supporting Tools

Artificial Intelligence (AI) is no longer a research curiosity—it powers critical systems in healthcare, finance, transportation, and defense. But as AI adoption grows, so do the risks: bias, security vulnerabilities, lack of transparency, and unintended consequences.

To help organizations manage these challenges, the U.S. National Institute of Standards and Technology (NIST) introduced the AI Risk Management Framework (AI RMF 1.0) in January 2023.

For AI engineers, this framework is more than high-level governance—it can be operationalized with existing open-source libraries, MLOps pipelines, and monitoring tools. Let’s break it down.

What is the NIST AI RMF?

The AI RMF is a voluntary, flexible, and sector-agnostic framework designed to help organizations manage risks across the AI lifecycle.

Its ultimate goal is to foster trustworthy AI systems by emphasizing principles like fairness, robustness, explainability, privacy, and accountability.

Think of it as the AI equivalent of DevSecOps best practices: a structured way to integrate risk thinking into design, development, deployment, and monitoring. Instead of retrofitting ethical or legal concerns at the end, engineers can bake them directly into code, pipelines, and testing.

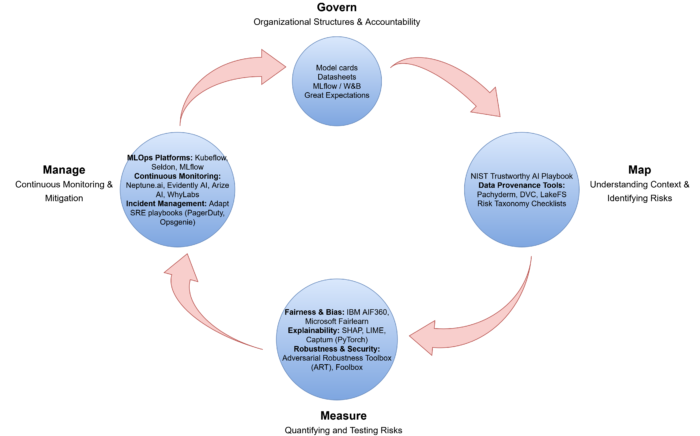

The Core Pillars of AI RMF and Supporting Tools

NIST organizes the framework around four core functions, known as the AI RMF Core. For engineers, these map nicely onto the ML lifecycle.

1. Govern – Organizational Structures & Accountability

What it means:

Governance is about who owns risk and how it is tracked. Without clear accountability, even the best fairness metrics or privacy protections won’t scale. This function ensures leadership commitment, defined responsibilities, and enforceable processes.

How engineers can implement it:

- Standardize documentation for datasets and models.
- Track lineage and provenance of data and experiments.
- Build reproducible ML pipelines so decisions can be audited later.
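Standardized documentation is easiest to enforce when it is structured data rather than free text. Below is a minimal sketch, assuming a hypothetical in-house schema loosely modeled on the sections of Google's Model Cards (intended use, limitations, ethical considerations). The class name, field names, and the completeness rule are illustrative assumptions, not an official Model Cards format.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class ModelCard:
    """Hypothetical in-house model card schema, loosely following the Model Cards sections."""
    name: str
    version: str
    owner: str                     # accountable team or person (Govern: who owns the risk)
    intended_use: str
    out_of_scope_uses: list[str] = field(default_factory=list)
    limitations: list[str] = field(default_factory=list)
    ethical_considerations: list[str] = field(default_factory=list)
    training_data: str = ""        # pointer to the exact dataset version used for training
    metrics: dict[str, float] = field(default_factory=dict)

    def validate(self) -> list[str]:
        """Return a list of problems; an empty list means the card can be published."""
        problems = []
        for field_name in ("name", "version", "owner", "intended_use", "training_data"):
            if not getattr(self, field_name).strip():
                problems.append(f"missing required field: {field_name}")
        if not self.limitations:
            problems.append("document at least one known limitation")
        return problems

    def to_json(self) -> str:
        """Serialize deterministically so cards diff cleanly in version control."""
        return json.dumps(asdict(self), indent=2, sort_keys=True)


# Example values are made up for illustration.
card = ModelCard(
    name="loan-approval",
    version="1.3.0",
    owner="credit-risk-ml",
    intended_use="Rank consumer loan applications for human review.",
    out_of_scope_uses=["Fully automated denial without human review"],
    limitations=["Not evaluated on applicants under 21"],
    training_data="data/loans@v12",
    metrics={"auc": 0.87},
)
assert card.validate() == []
print(card.to_json())
```

Running `validate()` in the training pipeline and storing the JSON alongside the model artifact (for example, as a run artifact in MLflow) ties each published model version to a documented owner, intended use, and training-data pointer.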

Supporting Tools:

- Model Cards (Google) → lightweight docs describing model purpose, limitations, and ethical considerations.
- Datasheets for Datasets (Gebru et al.) → dataset documentation capturing origin, known biases, and quality.
- Weights & Biases / MLflow → experiment tracking, versioning, and governance metadata.
- Great Expectations → data quality validation built into ETL/ML pipelines.
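Great Expectations expresses data-quality rules as declarative "expectations" that run inside a pipeline. Its API has changed across major versions, so rather than reproduce it, here is a plain-Python sketch of the same idea: named checks that return failure messages and block the batch when violated. The column names, bounds, and helper names are hypothetical.

```python
from typing import Any, Callable

Row = dict[str, Any]
Check = Callable[[list[Row]], list[str]]


def expect_not_null(column: str) -> Check:
    """Fail if any row has a missing or None value in `column`."""
    def check(rows: list[Row]) -> list[str]:
        bad = [i for i, row in enumerate(rows) if row.get(column) is None]
        return [f"{column}: {len(bad)} null value(s) at rows {bad}"] if bad else []
    return check


def expect_between(column: str, low: float, high: float) -> Check:
    """Fail if any non-null value in `column` falls outside [low, high]."""
    def check(rows: list[Row]) -> list[str]:
        bad = [
            i for i, row in enumerate(rows)
            if row.get(column) is not None and not low <= row[column] <= high
        ]
        return [f"{column}: {len(bad)} value(s) outside [{low}, {high}] at rows {bad}"] if bad else []
    return check


def validate(rows: list[Row], checks: list[Check]) -> list[str]:
    """Run every check and collect failure messages; an empty list means the batch passed."""
    failures: list[str] = []
    for check in checks:
        failures.extend(check(rows))
    return failures


# Hypothetical columns and bounds for a loan-application batch.
checks = [expect_not_null("applicant_id"), expect_between("age", 18, 120)]
batch = [
    {"applicant_id": "a1", "age": 34},
    {"applicant_id": None, "age": 29},
    {"applicant_id": "a3", "age": 7},
]
failures = validate(batch, checks)
for message in failures:
    # A real pipeline would stop the ETL/training step here and keep the report for audit.
    print(message)
```

Keeping the failure messages, not just a pass/fail flag, is what makes the check useful for governance: the report explains why a batch was rejected.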

2. Map – Understanding Context & Identifying Risks

What it means:

Before writing a line of model code, engineers need to understand context, stakeholders, and risks. Mapping ensures the AI system is aligned with real-world use cases and surfaces risks early.

How engineers can implement it:

- Identify who the AI system impacts (users, communities, regulators).
- Document the intended use vs. possible misuse.
- Anticipate risks (bias, adversarial threats, performance in edge cases).
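One lightweight way to capture these Map outputs is a risk register kept in version control next to the code. The sketch below assumes a hypothetical 1 to 5 likelihood × impact scoring scheme. The AI RMF does not prescribe a scoring formula, so the scale, the review threshold, and the example risks are placeholders an organization would define for itself.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Risk:
    description: str
    affected: tuple[str, ...]   # who is impacted: users, communities, regulators, ...
    category: str               # e.g. "bias", "adversarial", "edge case", "misuse"
    likelihood: int             # 1 (rare) .. 5 (almost certain), hypothetical scale
    impact: int                 # 1 (negligible) .. 5 (severe), hypothetical scale

    def __post_init__(self) -> None:
        for name in ("likelihood", "impact"):
            value = getattr(self, name)
            if not 1 <= value <= 5:
                raise ValueError(f"{name} must be between 1 and 5, got {value}")

    @property
    def score(self) -> int:
        return self.likelihood * self.impact


def needs_review(register: list[Risk], threshold: int = 12) -> list[Risk]:
    """Return risks at or above the (placeholder) threshold, highest score first."""
    flagged = [r for r in register if r.score >= threshold]
    return sorted(flagged, key=lambda r: r.score, reverse=True)


# Made-up entries for a hypothetical lending model.
register = [
    Risk("Lower approval rates for applicants from some zip codes", ("applicants",), "bias", 4, 4),
    Risk("Model reused for employment screening", ("job seekers",), "misuse", 2, 5),
    Risk("Crafted inputs evade the fraud detector", ("bank", "customers"), "adversarial", 3, 5),
]
for risk in needs_review(register):
    print(risk.score, risk.category, risk.description)
```

Recording misuse scenarios as first-class entries (the "misuse" category above) keeps the intended-use vs. misuse analysis from living only in a slide deck.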

Supporting Tools:

- NIST AI RMF Playbook → companion resource with suggested actions, references, and documentation guidance for each function.
- Data Provenance Tools:
  - Pachyderm → versioned data pipelines.
  - DVC → Git-like data and model versioning.
  - lakeFS → Git-like version control layer over object storage (e.g., S3) for ML data.
- Risk Taxonomy Checklists → resources from Partnership on AI and OECD for structured risk mapping.
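A core idea behind data-versioning tools such as DVC is content addressing: data is identified by a hash of its bytes, so any change produces a new version. The stdlib-only sketch below illustrates that idea for a local directory. It is not how DVC, Pachyderm, or lakeFS store data internally, and the function names are hypothetical.

```python
import hashlib
import json
import tempfile
from pathlib import Path


def file_sha256(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large datasets don't need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(data_dir: Path) -> dict[str, str]:
    """Map each file's relative path to its content hash."""
    return {
        p.relative_to(data_dir).as_posix(): file_sha256(p)
        for p in sorted(data_dir.rglob("*"))
        if p.is_file()
    }


def diff_manifests(old: dict[str, str], new: dict[str, str]) -> dict[str, list[str]]:
    """Report which files were added, removed, or modified between two versions."""
    return {
        "added": sorted(new.keys() - old.keys()),
        "removed": sorted(old.keys() - new.keys()),
        "modified": sorted(k for k in old.keys() & new.keys() if old[k] != new[k]),
    }


with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    (root / "train.csv").write_text("id,label\n1,0\n")
    (root / "test.csv").write_text("id,label\n2,1\n")
    v1 = build_manifest(root)
    (root / "train.csv").write_text("id,label\n1,0\n3,1\n")  # training data changed
    (root / "test.csv").unlink()                              # test split removed
    v2 = build_manifest(root)
    print(json.dumps(diff_manifests(v1, v2)))
```

Committing the manifest (not the data) alongside the training code is enough to answer "which exact data produced this model?" later.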

3. Measure – Quantifying and Testing Risks

What it means:

Mapping risks isn’t enough—you need to quantify them with metrics. This includes fairness, robustness, explainability, privacy leakage, and resilience to adversarial attacks.

How engineers can implement it:

- Integrate fairness and bias checks into CI/CD pipelines.
- Run explainability tests to confirm that model behavior can be explained to each stakeholder group (developers, auditors, affected users).
- Stress-test robustness with adversarial attacks and edge cases.
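A fairness check in CI can be as simple as a test that fails when a group metric exceeds a tolerance. The sketch below computes the demographic parity difference (the gap between the highest and lowest positive-prediction rate across groups) by hand so it has no dependencies. Fairlearn provides an equivalent metric, `fairlearn.metrics.demographic_parity_difference`. The 0.1 tolerance and the toy predictions are placeholders, not recommended values.

```python
from collections import defaultdict
from collections.abc import Hashable, Sequence

TOLERANCE = 0.1  # placeholder; agree on the real value with stakeholders per use case


def selection_rates(y_pred: Sequence[int], groups: Sequence[Hashable]) -> dict[Hashable, float]:
    """Fraction of positive (1) predictions within each group."""
    if len(y_pred) != len(groups):
        raise ValueError("y_pred and groups must have the same length")
    positives: dict[Hashable, int] = defaultdict(int)
    totals: dict[Hashable, int] = defaultdict(int)
    for pred, group in zip(y_pred, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(y_pred: Sequence[int], groups: Sequence[Hashable]) -> float:
    """Largest minus smallest group selection rate; 0.0 means equal rates."""
    rates = selection_rates(y_pred, groups).values()
    return max(rates) - min(rates)


def test_demographic_parity_within_tolerance() -> None:
    # In a real pipeline these would be the candidate model's predictions on a held-out set.
    y_pred = [1, 0, 1, 1, 1, 1, 0, 1]
    groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
    gap = demographic_parity_difference(y_pred, groups)
    assert gap <= TOLERANCE, f"demographic parity difference {gap:.2f} exceeds {TOLERANCE}"
```

Because the function name starts with `test_`, pytest collects it automatically, so the fairness check fails the build the same way a broken unit test would.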

Supporting Tools:

- Fairness & Bias:
  - IBM AIF360 → 70+ fairness metrics and mitigation strategies.
  - Fairlearn (originally Microsoft) → group fairness metrics (e.g., MetricFrame) and mitigation algorithms, including post-processing.
- Explainability:
  - SHAP, LIME, Captum (PyTorch) → feature attribution and local/global explainability.
  - Evidently AI → interpretability reports integrated with drift monitoring.
- Robustness & Security:
  - Adversarial Robustness Toolbox (ART) → evasion, poisoning, extraction, and inference attacks, plus defenses.
  - Foolbox → adversarial attack generation for benchmarking model resilience.
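ART and Foolbox wrap many attacks behind framework-specific classes. To show what a basic robustness test measures without depending on either API, here is the fast gradient sign method (FGSM) applied by hand to a tiny logistic-regression model whose weights and inputs are made up for illustration. For this model the gradient of the log loss with respect to the input has a closed form, (p − y)·w, so no autodiff framework is needed.

```python
import math


def predict_proba(w: list[float], b: float, x: list[float]) -> float:
    """Logistic regression: P(y=1 | x) = sigmoid(w·x + b)."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))


def fgsm(w: list[float], b: float, x: list[float], y: int, eps: float) -> list[float]:
    """Fast gradient sign method: move each feature by eps in the direction that increases the loss.

    For binary cross-entropy on a logistic model, d(loss)/dx = (p - y) * w.
    """
    p = predict_proba(w, b, x)
    grad = [(p - y) * wi for wi in w]
    return [xi + eps * math.copysign(1.0, g) if g != 0 else xi for xi, g in zip(x, grad)]


def robust_accuracy(w: list[float], b: float, xs: list[list[float]], ys: list[int], eps: float) -> float:
    """Share of examples still classified correctly after an FGSM perturbation of size eps."""
    correct = 0
    for x, y in zip(xs, ys):
        x_adv = fgsm(w, b, x, y, eps)
        correct += int((predict_proba(w, b, x_adv) >= 0.5) == bool(y))
    return correct / len(xs)


w, b = [2.0, -1.0], 0.0  # made-up weights for illustration
xs = [[1.0, 0.5], [-1.0, 0.5], [0.2, 0.1], [-0.3, 0.2]]
ys = [1, 0, 1, 0]
for eps in (0.0, 0.05, 0.3):
    print(f"eps={eps}: robust accuracy = {robust_accuracy(w, b, xs, ys, eps):.2f}")
```

With these toy numbers, robust accuracy stays at 1.00 for eps=0.05 and drops to 0.50 at eps=0.3: the two points closest to the decision boundary flip. Tracking that curve across eps values, and failing CI when it falls below an agreed floor, is the essence of an adversarial robustness test.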

4. Manage – Continuous Monitoring & Mitigation

What it means:

AI risks don’t stop at deployment—they evolve as data shifts, adversaries adapt, and systems scale. Managing risk means establishing feedback loops, monitoring dashboards, and incident response plans.

How engineers can implement it:

- Treat models as living systems that require continuous health checks.
- Monitor for data drift, bias drift, and performance decay.
- Set up incident management protocols for when AI fails.
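Data drift is often summarized with the Population Stability Index (PSI), which compares the binned distribution of a feature in production against a reference window: PSI = Σ (aᵢ − eᵢ)·ln(aᵢ / eᵢ), where eᵢ and aᵢ are the reference and current shares of bin i. The stdlib sketch below assumes hypothetical bin edges for an "age" feature. The small floor that avoids log(0) and the commonly quoted 0.1 / 0.25 thresholds are industry conventions, not part of the AI RMF or of any specific monitoring tool.

```python
import bisect
import math


def bin_shares(values: list[float], edges: list[float], floor: float = 1e-6) -> list[float]:
    """Share of values per bin; sorted interior edges define len(edges) + 1 bins."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect.bisect_right(edges, v)] += 1
    total = len(values)
    # Floor each share so empty bins don't cause log(0) or division by zero.
    return [max(c / total, floor) for c in counts]


def psi(reference: list[float], current: list[float], edges: list[float]) -> float:
    """Population Stability Index of `current` relative to `reference`."""
    e = bin_shares(reference, edges)
    a = bin_shares(current, edges)
    return sum((ai - ei) * math.log(ai / ei) for ai, ei in zip(a, e))


def drift_status(score: float) -> str:
    # Rule-of-thumb thresholds; tune them per feature and per use case.
    if score < 0.1:
        return "stable"
    if score < 0.25:
        return "moderate drift"
    return "significant drift"


edges = [20.0, 30.0, 40.0, 50.0]  # hypothetical bins for an "age" feature
reference = [22, 25, 31, 35, 38, 41, 45, 52, 58, 61]
current = [45, 48, 51, 53, 55, 57, 59, 62, 64, 66]
score = psi(reference, current, edges)
print(f"PSI={score:.3f} -> {drift_status(score)}")
```

The same computation applied to a model's prediction scores per demographic group gives a simple signal for bias drift, not just input drift.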

Supporting Tools:

- MLOps Platforms: Kubeflow, Seldon, MLflow for deployment and lifecycle tracking.
- Continuous Monitoring:
  - Neptune.ai → experiment and metadata tracking, useful for logging risk metrics alongside performance metrics.
  - Evidently AI, Arize AI, WhyLabs → production-grade drift, bias, and observability dashboards.
- Incident Management: Adapt SRE playbooks (PagerDuty, Opsgenie) for ML-specific failures like data poisoning or unexpected bias spikes.
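Adapting SRE practice to ML largely means turning monitored risk metrics into alerts that carry a severity and a runbook. The sketch below assumes hypothetical metric names, thresholds, and runbook paths. Actually paging someone would go through your incident tool's own integration, which is not shown.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AlertRule:
    metric: str       # name of a monitored metric, e.g. from a drift or bias dashboard
    threshold: float
    severity: str     # e.g. "page" (respond now) or "ticket" (next business day)
    runbook: str      # where responders find ML-specific mitigation steps


@dataclass(frozen=True)
class Incident:
    metric: str
    value: float
    severity: str
    runbook: str


def evaluate(metrics: dict[str, float], rules: list[AlertRule]) -> list[Incident]:
    """Open an incident for every rule whose metric exceeds its threshold.

    A metric missing from the snapshot also opens a ticket, because a missing
    signal means that part of the model is currently unmonitored.
    """
    incidents = []
    for rule in rules:
        value = metrics.get(rule.metric)
        if value is None:
            incidents.append(Incident(rule.metric, float("nan"), "ticket", rule.runbook))
        elif value > rule.threshold:
            incidents.append(Incident(rule.metric, value, rule.severity, rule.runbook))
    return incidents


# Hypothetical rules and a monitoring snapshot.
rules = [
    AlertRule("psi_age", 0.25, "ticket", "runbooks/data-drift.md"),
    AlertRule("demographic_parity_difference", 0.1, "page", "runbooks/bias-spike.md"),
    AlertRule("error_rate", 0.05, "page", "runbooks/performance-decay.md"),
]
snapshot = {"psi_age": 0.31, "demographic_parity_difference": 0.04}
for incident in evaluate(snapshot, rules):
    print(incident)
```

Keeping the rules as data makes them reviewable by governance teams and versionable alongside the model, which supports the accountability goals of the Govern function.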

Characteristics of Trustworthy AI (and Tools to Support Them)

The AI RMF identifies seven key characteristics of trustworthy AI. These are cross-cutting qualities every AI system should strive for:

- Valid & Reliable → Testing frameworks (e.g., pytest) + continuous evaluation.
- Safe → Simulation environments (e.g., CARLA for self-driving AI).
- Secure & Resilient → Adversarial robustness tools (ART, Foolbox).
- Accountable & Transparent → Model Cards, version control (MLflow, DVC).
- Explainable & Interpretable → SHAP, LIME, Captum.
- Privacy-Enhanced → TensorFlow Privacy, PySyft, Opacus.
- Fair, with Harmful Bias Managed → Fairlearn, AIF360, Evidently AI bias dashboards.

For engineers, these aren’t abstract principles—they map directly to unit tests, pipelines, and monitoring dashboards you can implement today.
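One way to make that mapping concrete is a release gate that groups automated checks by trustworthiness characteristic and blocks deployment if any fail. In this sketch, hypothetical lambda checks over a metrics dictionary stand in for real evaluations (an accuracy suite, a robustness score, a fairness metric, a model-card completeness check). The metric names and limits are placeholders.

```python
from typing import Callable

Check = Callable[[dict[str, float]], bool]

# Hypothetical checks keyed by AI RMF trustworthiness characteristic.
GATE: dict[str, list[tuple[str, Check]]] = {
    "Valid & Reliable": [("accuracy >= 0.80", lambda m: m["accuracy"] >= 0.80)],
    "Secure & Resilient": [("robust accuracy >= 0.60", lambda m: m["robust_accuracy"] >= 0.60)],
    "Fair, with Harmful Bias Managed": [
        ("demographic parity difference <= 0.10", lambda m: m["dp_difference"] <= 0.10),
    ],
    "Accountable & Transparent": [("model card complete", lambda m: m["model_card_problems"] == 0)],
}


def run_gate(metrics: dict[str, float]) -> dict[str, list[str]]:
    """Return failed check names per characteristic; an empty dict means the release may proceed."""
    failures: dict[str, list[str]] = {}
    for characteristic, checks in GATE.items():
        failed = []
        for name, check in checks:
            try:
                ok = check(metrics)
            except KeyError:
                ok = False  # a metric that was never computed counts as a failure
            if not ok:
                failed.append(name)
        if failed:
            failures[characteristic] = failed
    return failures


# Made-up metrics from a candidate model's evaluation run.
metrics = {"accuracy": 0.86, "robust_accuracy": 0.50, "dp_difference": 0.04, "model_card_problems": 0}
failures = run_gate(metrics)
for characteristic, failed in failures.items():
    print(f"BLOCKED [{characteristic}]: {', '.join(failed)}")
# In CI, exit with a non-zero status when `failures` is non-empty so the pipeline stops.
```

Treating a missing metric as a failure is a deliberate choice: a gate that silently passes when an evaluation was skipped gives false assurance.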

Why It Matters for Engineers

Traditionally, “risk” in engineering meant downtime or performance degradation. But in AI, risk is multi-dimensional:

- A biased recommendation engine → unfair economic impact.
- A misclassified medical image → patient safety risks.
- An adversarial attack on a financial model → systemic security threat.

The AI RMF helps engineers broaden their definition of risk and integrate safeguards across the lifecycle.

By adopting the framework with supporting tools, engineers can:

- Automate fairness, robustness, and privacy checks in CI/CD.
- Log provenance for datasets and models.
- Build dashboards for continuous risk monitoring.
- Collaborate with legal and policy teams using standardized documentation.

Getting Started (Actionable Steps)

- Integrate Provenance Tracking → Use DVC or Pachyderm in your ML pipeline.
- Automate Fairness & Robustness Tests → Add Fairlearn and ART checks into CI/CD.
- Adopt Transparency Practices → Publish Model Cards for all deployed models.
- Monitor in Production → Deploy Evidently AI or WhyLabs for drift & bias detection.
- Collaborate Cross-Functionally → Align engineering practices with governance and compliance teams.

Final Thoughts

The NIST AI RMF is not a compliance checklist—it’s a living guide to building trustworthy AI. For engineers, it bridges the gap between technical implementation and organizational responsibility.

By embedding Govern, Map, Measure, and Manage into your workflow, and leveraging open-source tools like AIF360, Fairlearn, ART, MLflow, and Evidently AI, you don't just ship models: you ship trustworthy models.

As regulation around AI tightens globally (EU AI Act, U.S. AI Executive Orders, ISO/IEC standards), frameworks like NIST’s AI RMF will help engineers stay ahead of the curve.

👉 Takeaway for AI Engineers: Use the NIST AI RMF as your north star, and operationalize it with today’s open-source and enterprise tools. Trustworthy AI isn’t just theory—it’s code, pipelines, and monitoring.
