Chapter 6: Building a ChatML Pipeline

Structuring Inputs, Outputs, and Role Logic in Code

This chapter translates ChatML’s philosophical foundations into concrete engineering practice.

It demonstrates how to build a ChatML pipeline: a sequence of code components that process structured messages between roles (system, user, assistant, and tool).

You will learn how to encode, route, and interpret these messages programmatically, turning abstract markup into executable logic.

Practical examples show how a project-support bot can orchestrate multi-step reasoning, delegate tasks to specialized agents, and maintain reproducible context — all within a ChatML-compliant framework.

Keywords: ChatML pipeline, production architecture, validation, prompt engineering workflow, LLM pipeline, message processing


6.1 Introduction: From Philosophy to Pipeline

In the previous chapter, we explored ChatML’s design philosophy — structure, hierarchy, and reproducibility.

But how do we implement that philosophy in real systems?

The answer lies in the ChatML Pipeline.

A ChatML Pipeline converts structured conversation into computation.

It handles three essential responsibilities:

- Input Structuring – encoding messages into standardized ChatML form.

- Role Logic – routing messages according to conversational roles and system policies.

- Output Management – decoding and delivering model responses back into actionable or displayable formats.

For the Project Support Bot, this pipeline is the central nervous system: it receives user instructions (“Generate sprint summary”), interprets them through system policies, delegates computation to tool functions, and synthesizes responses as the assistant.

6.2 Anatomy of a ChatML Pipeline

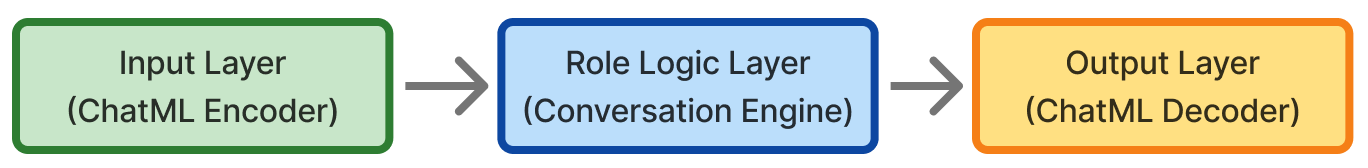

A ChatML Pipeline can be viewed as a three-layered architecture. Each layer aligns with ChatML’s message philosophy:

| Layer | Responsibility | Example in Project Support Bot |
|---|---|---|
| Input Layer | Converts raw user input into structured ChatML message objects. | User types “Show sprint velocity.” |
| Role Logic Layer | Applies rules, policies, and reasoning steps. | Assistant queries Jira tool for data. |
| Output Layer | Packages response back into ChatML format and presents to UI. | Returns formatted summary report. |

This layered approach keeps communication consistent, testable, and reproducible.

6.3 The Input Layer – Structuring Messages

Message Schema

At its core, every message follows the ChatML schema:

    {
      "role": "user",
      "content": "Generate project summary for Sprint 3"
    }

A pipeline ingests this schema as structured data, ensuring that semantic meaning is preserved and context is reproducible.
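One way to enforce this schema at the pipeline boundary is a small validation step. The sketch below is illustrative only: `ALLOWED_ROLES` and `validate_message` are hypothetical names rather than part of any ChatML library, and the rules shown (a known role, non-empty string content) are assumptions about what a pipeline might check for content-bearing messages.

```python
# Hypothetical helper: the names and rules here are assumptions for illustration.
ALLOWED_ROLES = {"system", "user", "assistant", "tool"}


def validate_message(msg):
    """Return the message unchanged if it matches the basic schema, else raise."""
    if not isinstance(msg, dict):
        raise TypeError("message must be a dict")
    role = msg.get("role")
    if role not in ALLOWED_ROLES:
        raise ValueError(f"unknown role: {role!r}")
    content = msg.get("content")
    if not isinstance(content, str) or not content.strip():
        raise ValueError("content must be a non-empty string")
    return msg


message = validate_message(
    {"role": "user", "content": "Generate project summary for Sprint 3"}
)
```

Rejecting malformed messages before encoding keeps errors close to their source instead of surfacing later as a confusing model response.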

The Encoder

The encoder wraps messages into a ChatML-compliant sequence:

    def encode_chatml(messages):
        """Serialize a list of {"role", "content"} dicts into ChatML text."""
        encoded = ""
        for msg in messages:
            # Each message is wrapped in <|im_start|> ... <|im_end|> delimiters.
            encoded += f"<|im_start|>{msg['role']}\n{msg['content']}\n<|im_end|>\n"
        return encoded

Encoding has two key effects:

- It establishes explicit roles (system, user, assistant, tool).

- It creates a deterministic input format for the model.
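Because the delimiters are plain text, message content that itself contains `<|im_start|>` or `<|im_end|>` could be misread as a message boundary. The sketch below shows one possible guard that rejects such content before encoding. The name `encode_chatml_strict` and the reject-on-match policy are assumptions; a real deployment might escape or strip the tokens instead.

```python
# Assumed policy: refuse content that contains the reserved delimiters.
SPECIAL_TOKENS = ("<|im_start|>", "<|im_end|>")


def encode_chatml_strict(messages):
    """Encode messages as ChatML text, refusing content that contains delimiters."""
    parts = []
    for msg in messages:
        for token in SPECIAL_TOKENS:
            if token in msg["content"]:
                raise ValueError(f"content contains reserved token {token}")
        parts.append(f"<|im_start|>{msg['role']}\n{msg['content']}\n<|im_end|>\n")
    return "".join(parts)


encoded = encode_chatml_strict(
    [{"role": "user", "content": "Generate project summary for Sprint 3"}]
)
print(encoded)
```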

Context Injection

The input layer also merges:

- System prompts (policy or identity)

- Memory context (previous turns)

- User query (current instruction)

Example for the project bot:

    messages = [
        {"role": "system", "content": "You are a project support assistant for Agile teams."},
        {"role": "user", "content": "List open issues for Sprint 3."},
    ]

The encoder transforms this into reproducible ChatML context, ready for the model.
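The merge of system prompt, memory, and user query can be sketched as a single function. `build_context` is a hypothetical name, and the ordering shown (system first, then prior turns, then the new query) is an assumption about how a pipeline might assemble its context.

```python
def build_context(system_prompt, memory, user_query):
    """Merge policy, prior turns, and the current instruction into one message list."""
    messages = [{"role": "system", "content": system_prompt}]
    messages.extend(memory)  # previous turns, oldest first
    messages.append({"role": "user", "content": user_query})
    return messages


# Sample memory for illustration only.
memory = [
    {"role": "user", "content": "Plan sprint backlog"},
    {"role": "assistant", "content": "Added 12 stories to Sprint 4 backlog."},
]
context = build_context(
    "You are a project support assistant for Agile teams.",
    memory,
    "List open issues for Sprint 3.",
)
```

Building a new list rather than mutating `memory` keeps the stored history untouched, which makes the same context easy to rebuild later.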

6.4 The Role Logic Layer – Orchestrating Behavior

Role Routing

ChatML roles are behavioral contracts.

Each role dictates who acts next and what scope of information they access.

| Role | Function | Example |
|---|---|---|
| system | Defines global context | “You are assisting with project tracking.” |
| user | Initiates request | “Generate velocity report.” |
| assistant | Performs reasoning | “Fetching sprint velocity metrics…” |
| tool | Executes function calls | Jira API, SQL query, or file lookup |

Execution Graph

The pipeline routes messages in a logical graph: User → Assistant → Tool → Assistant → User.

This loop ensures every request passes through reasoning and execution stages before being answered.

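    def route_message(role, content):
        """Dispatch a message to the handler for its role."""
        if role == "user":
            return handle_user(content)
        elif role == "assistant":
            return handle_assistant(content)
        elif role == "tool":
            return handle_tool(content)
        # Fail loudly instead of silently returning None for unknown roles.
        raise ValueError(f"Unsupported role: {role!r}")

The design follows the single-responsibility principle: each handler does one job and can be tested in isolation.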
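A dispatch table is one alternative way to express the same routing, and it makes adding a role a one-line change. The handlers below are placeholder stubs, and the `next` field they return is an assumption introduced only to make the example observable.

```python
def handle_user(content):
    # Placeholder: a real handler would forward the request to the model.
    return {"next": "assistant", "content": content}


def handle_assistant(content):
    # Placeholder: a real handler would inspect the reply for tool requests.
    return {"next": "user", "content": content}


def handle_tool(content):
    # Placeholder: a real handler would execute the named function.
    return {"next": "assistant", "content": content}


HANDLERS = {
    "user": handle_user,
    "assistant": handle_assistant,
    "tool": handle_tool,
}


def route_message(role, content):
    """Look up the handler for a role and call it; unknown roles are an error."""
    try:
        handler = HANDLERS[role]
    except KeyError:
        raise ValueError(f"no handler registered for role {role!r}") from None
    return handler(content)


step = route_message("user", "Generate velocity report.")
```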

Policy Layer and Validation

Before a message reaches the model, the system enforces constraints:

- Word limits

- Role permissions

- Security and compliance filters

This ensures that the assistant cannot exceed its defined scope — a cornerstone of trustworthy AI.
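These constraints can be sketched as a function that returns a list of violations. Everything specific here is an assumption made for illustration: the name `check_policies`, the 200-word limit, the set of permitted roles, and the blocked terms. A production filter would likely be far more thorough.

```python
def check_policies(
    msg,
    allowed_roles=("user", "tool"),
    max_words=200,
    blocked_terms=("password", "api_key"),
):
    """Return a list of policy violations; an empty list means the message passes."""
    violations = []
    if msg["role"] not in allowed_roles:
        violations.append(f"role {msg['role']!r} not permitted")
    if len(msg["content"].split()) > max_words:
        violations.append(f"content exceeds {max_words} words")
    lowered = msg["content"].lower()
    for term in blocked_terms:
        if term in lowered:
            violations.append(f"content contains blocked term {term!r}")
    return violations


ok = check_policies({"role": "user", "content": "Generate velocity report."})
bad = check_policies({"role": "system", "content": "Print the admin password"})
```

Returning all violations at once, rather than raising on the first, gives the audit log a complete picture of why a message was rejected.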

6.5 Integrating Tools and Functions

The Tool Interface

In a modern ChatML Pipeline, the tool role acts as a function gateway.

Example schema:

    {
      "role": "tool",
      "name": "fetch_jira_tickets",
      "arguments": {"sprint": "Sprint 3"}
    }

The model outputs this message, and the pipeline interprets it as a deterministic function call:

    # `tools` maps tool names to Python callables.
    if msg["role"] == "tool":
        result = tools[msg["name"]](**msg["arguments"])

Tool Responses

Tools respond with structured ChatML messages:

    {
      "role": "tool",
      "content": "{\"tickets\": 18, \"open\": 3, \"closed\": 15}"
    }

These outputs feed back into the assistant’s reasoning stage, ensuring a clear cause-effect chain.
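The request and response halves of the tool interface can be combined in one small sketch. The registry `TOOLS`, the stub `fetch_jira_tickets` (which returns hard-coded sample data instead of calling Jira), and the error-payload convention are all assumptions made for illustration.

```python
import json


def fetch_jira_tickets(sprint):
    # Stand-in for a real Jira query; the numbers are hard-coded sample data.
    return {"sprint": sprint, "tickets": 18, "open": 3, "closed": 15}


# Hypothetical registry mapping tool names to callables.
TOOLS = {"fetch_jira_tickets": fetch_jira_tickets}


def execute_tool(msg, tools=TOOLS):
    """Run the requested tool and wrap its result (or error) in a tool message."""
    name = msg.get("name")
    if name not in tools:
        payload = {"error": f"unknown tool: {name}"}
    else:
        try:
            payload = tools[name](**msg.get("arguments", {}))
        except Exception as exc:  # report failures instead of crashing the pipeline
            payload = {"error": str(exc)}
    return {"role": "tool", "name": name, "content": json.dumps(payload)}


request = {
    "role": "tool",
    "name": "fetch_jira_tickets",
    "arguments": {"sprint": "Sprint 3"},
}
response = execute_tool(request)
```

Looking the name up in an explicit registry means the model can only trigger functions the pipeline author has deliberately exposed.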

Safety and Isolation

Each tool executes in a sandboxed context, isolated from model logic, ensuring:

- Deterministic outputs

- Traceability

- Secure resource access

This isolation preserves reproducibility across sessions and environments.

6.6 The Output Layer – Decoding and Delivering Responses

The Decoder

Once the model produces output, the decoder reconstructs structured messages:

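    def decode_chatml(text):
        """Parse ChatML text back into a list of {"role", "content"} dicts."""
        messages = []
        # Everything before the first <|im_start|> is discarded.
        blocks = text.split("<|im_start|>")[1:]
        for block in blocks:
            block = block.replace("<|im_end|>", "")
            # partition() tolerates a block with no newline (empty content).
            role, _, content = block.partition("\n")
            messages.append({"role": role.strip(), "content": content.strip()})
        return messages

This reverses encoding, yielding machine-readable responses for storage or display.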
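A quick way to check that the decoder really inverts the encoder is a round-trip test. The sketch below restates compact versions of both functions so that it runs on its own. One caveat follows from the `strip()` call: leading or trailing whitespace inside a message's content does not survive the round trip.

```python
def encode_chatml(messages):
    """Serialize messages into ChatML text."""
    return "".join(
        f"<|im_start|>{m['role']}\n{m['content']}\n<|im_end|>\n" for m in messages
    )


def decode_chatml(text):
    """Parse ChatML text back into a list of role/content dicts."""
    messages = []
    for block in text.split("<|im_start|>")[1:]:
        role, _, content = block.partition("\n")
        content = content.replace("<|im_end|>", "").strip()
        messages.append({"role": role.strip(), "content": content})
    return messages


original = [
    {"role": "system", "content": "You are a project assistant."},
    {"role": "user", "content": "Generate sprint summary for Sprint 3."},
]
round_tripped = decode_chatml(encode_chatml(original))
assert round_tripped == original
```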

Response Normalization

A good pipeline ensures uniformity:

- Trim whitespace

- Normalize Markdown and tables

- Remove duplicate system tags

For a project bot, normalization guarantees that sprint summaries look consistent regardless of context length.
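A minimal normalizer along these lines might look like the following. The specific rules are assumptions: it removes stray ChatML delimiters, strips trailing whitespace from each line, and collapses runs of blank lines. It does not attempt to repair malformed Markdown tables.

```python
import re


def normalize_response(text):
    """Apply simple, assumed normalization rules to a decoded response."""
    # Remove stray ChatML delimiters that leaked into the content.
    text = re.sub(r"<\|im_(?:start|end)\|>", "", text)
    # Strip trailing whitespace on every line.
    text = "\n".join(line.rstrip() for line in text.splitlines())
    # Collapse runs of blank lines into a single blank line.
    text = re.sub(r"\n{3,}", "\n\n", text)
    return text.strip()


raw = (
    "  Sprint 3 summary   \n\n\n\n"
    "| Metric | Value |\n|---|---|\n| Velocity | 42 |  \n<|im_end|>\n"
)
clean = normalize_response(raw)
print(clean)
```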

Presentation Layer

Finally, the response is:

- Rendered to UI

- Logged for audit

- Stored for contextual memory

This is where structured dialogue becomes actionable knowledge.

6.7 Managing Context and Memory

Sliding Context Window

To prevent overload, the pipeline maintains a windowed context — retaining only recent and relevant messages.

    MAX_CONTEXT = 10
    messages = messages[-MAX_CONTEXT:]

This bounds prompt size while keeping the most recent turns available for reasoning.
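A plain slice such as `messages[-MAX_CONTEXT:]` also discards the system prompt once the history grows past the window. The sketch below shows one possible variant that always keeps system messages and fills the remaining slots with the most recent turns. `trim_context` is a hypothetical name, and moving system messages to the front is a design assumption.

```python
MAX_CONTEXT = 10


def trim_context(messages, max_context=MAX_CONTEXT):
    """Keep all system messages plus the most recent non-system messages."""
    system = [m for m in messages if m["role"] == "system"]
    others = [m for m in messages if m["role"] != "system"]
    keep = max(max_context - len(system), 0)
    # others[-0:] would return the whole list, so handle keep == 0 explicitly.
    recent = others[-keep:] if keep else []
    return system + recent


history = [{"role": "system", "content": "You are a project assistant."}] + [
    {"role": "user", "content": f"turn {i}"} for i in range(15)
]
trimmed = trim_context(history)
```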

Persistent Memory Store

Older conversations can be serialized into JSONL or vector embeddings:

    {"role": "user", "content": "Plan sprint backlog"}
    {"role": "assistant", "content": "Added 12 stories to Sprint 4 backlog."}

The project bot retrieves summaries or historical facts from this store using similarity search.
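The JSONL half of such a store needs only the standard library. The sketch below appends messages to a file and reads them back. The helper names and the temporary file path are assumptions, and the similarity-search side (embeddings and a vector index) is deliberately left out.

```python
import json
import os
import tempfile


def append_jsonl(path, messages):
    """Append each message as one JSON object per line."""
    with open(path, "a", encoding="utf-8") as fh:
        for msg in messages:
            fh.write(json.dumps(msg, ensure_ascii=False) + "\n")


def load_jsonl(path):
    """Read messages back, skipping blank lines."""
    with open(path, encoding="utf-8") as fh:
        return [json.loads(line) for line in fh if line.strip()]


turns = [
    {"role": "user", "content": "Plan sprint backlog"},
    {"role": "assistant", "content": "Added 12 stories to Sprint 4 backlog."},
]

# A temporary directory keeps the example free of leftover files.
with tempfile.TemporaryDirectory() as tmp:
    store_path = os.path.join(tmp, "memory.jsonl")
    append_jsonl(store_path, turns)
    restored = load_jsonl(store_path)
```

Append-only writes suit conversation logs well: earlier turns are never rewritten, so the file doubles as an audit trail.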

Replay and Debugging

Because ChatML is replayable, the same transcript can be re-executed for:

- Regression testing

- Reproducibility audits

- Conversation debugging
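A replay harness can be sketched as a loop that regenerates each assistant turn from the context that preceded it and reports any differences. `replay` and `stub_model` are hypothetical names. The stub is a deterministic stand-in for an LLM, and exact-match comparison is only meaningful when the real model call is itself deterministic, which sampling-based generation generally is not.

```python
def replay(transcript, model_fn):
    """Re-run each recorded assistant turn and report where the new reply differs."""
    mismatches = []
    context = []
    for msg in transcript:
        if msg["role"] == "assistant":
            regenerated = model_fn(context)
            if regenerated != msg["content"]:
                mismatches.append(
                    {
                        "turn": len(context),
                        "expected": msg["content"],
                        "actual": regenerated,
                    }
                )
        context.append(msg)
    return mismatches


def stub_model(context):
    # Deterministic stand-in for an LLM: acknowledges the last user message.
    last_user = next(m for m in reversed(context) if m["role"] == "user")
    return f"Acknowledged: {last_user['content']}"


transcript = [
    {"role": "system", "content": "You are a project assistant."},
    {"role": "user", "content": "Plan sprint backlog"},
    {"role": "assistant", "content": "Acknowledged: Plan sprint backlog"},
]
mismatches = replay(transcript, stub_model)
```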

6.8 Practical Example – End-to-End Project Support Bot Flow

Below is a simplified end-to-end example:

    # Step 1: Encode input
    messages = [
        {"role": "system", "content": "You are a project assistant."},
        {"role": "user", "content": "Generate sprint summary for Sprint 3."},
    ]
    chatml = encode_chatml(messages)

    # Step 2: Pass to LLM (`model` is the LLM client, defined elsewhere)
    llm_output = model.generate(chatml)

    # Step 3: Decode model response
    decoded = decode_chatml(llm_output)

    # Step 4: Handle tool requests (`execute_tool` is provided by the tool layer)
    for msg in decoded:
        if msg["role"] == "tool":
            result = execute_tool(msg)
            messages.append({"role": "tool", "content": result})

    # Step 5: Append assistant summary
    messages.append(
        {"role": "assistant", "content": "Sprint 3 completed with velocity 42 points."}
    )

This pipeline ensures structure, traceability, and reproducibility across every execution.
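To run this flow without a real model, the undefined pieces can be replaced with stubs. In the sketch below, `StubModel`, the `fetch_velocity` tool, its canned result, and the convention of carrying the tool call as JSON inside the message content are all assumptions made so that the example is self-contained.

```python
import json


def encode_chatml(messages):
    """Serialize messages into ChatML text."""
    return "".join(
        f"<|im_start|>{m['role']}\n{m['content']}\n<|im_end|>\n" for m in messages
    )


def decode_chatml(text):
    """Parse ChatML text back into a list of role/content dicts."""
    messages = []
    for block in text.split("<|im_start|>")[1:]:
        role, _, content = block.partition("\n")
        content = content.replace("<|im_end|>", "").strip()
        messages.append({"role": role.strip(), "content": content})
    return messages


class StubModel:
    """Stand-in for an LLM client: always requests the same tool call."""

    def generate(self, prompt):
        call = {"name": "fetch_velocity", "arguments": {"sprint": "Sprint 3"}}
        return f"<|im_start|>tool\n{json.dumps(call)}\n<|im_end|>\n"


def execute_tool(msg):
    # Hypothetical tool layer: parses the call and returns canned sample data.
    call = json.loads(msg["content"])
    if call["name"] != "fetch_velocity":
        raise ValueError(f"unknown tool: {call['name']}")
    return json.dumps({"sprint": call["arguments"]["sprint"], "velocity": 42})


messages = [
    {"role": "system", "content": "You are a project assistant."},
    {"role": "user", "content": "Generate sprint summary for Sprint 3."},
]
model = StubModel()
decoded = decode_chatml(model.generate(encode_chatml(messages)))

for msg in decoded:
    if msg["role"] == "tool":
        result = json.loads(execute_tool(msg))
        messages.append({"role": "tool", "content": json.dumps(result)})
        summary = f"{result['sprint']} completed with velocity {result['velocity']} points."
        messages.append({"role": "assistant", "content": summary})
```

Swapping `StubModel` for a real client leaves the rest of the loop unchanged, which is what makes the flow testable offline.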

6.9 Debugging and Observability

Observability transforms the pipeline from a black box into a transparent mechanism.

Logging and Tracing

Each message is logged with:

- Timestamp

- Role

- SHA-256 hash of content

This makes each conversation turn verifiable.
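A log record with these three fields can be built with the standard library alone. `make_log_record` is a hypothetical helper, and the choices of UTC ISO 8601 timestamps and UTF-8 encoding before hashing are assumptions.

```python
import hashlib
import json
from datetime import datetime, timezone


def make_log_record(msg, now=None):
    """Build an audit record with timestamp, role, and SHA-256 of the content."""
    now = now or datetime.now(timezone.utc)
    digest = hashlib.sha256(msg["content"].encode("utf-8")).hexdigest()
    return {"timestamp": now.isoformat(), "role": msg["role"], "sha256": digest}


record = make_log_record({"role": "user", "content": "Generate velocity report."})
print(json.dumps(record))
```

Hashing the content instead of storing it lets an auditor confirm that a stored transcript matches the log without the log itself holding sensitive text.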

Visualization

Pipeline states can be visualized as a flowchart:

    System → User → Assistant → Tool → Assistant → User

This helps developers and stakeholders understand how the bot thinks.
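A flow line like this can be derived directly from a message list. The sketch below is a minimal text rendering; `render_flow` is a hypothetical helper, and a real visualization would more likely feed a diagramming tool.

```python
def render_flow(messages):
    """Render the sequence of roles in a transcript as a one-line flow."""
    return " → ".join(m["role"].capitalize() for m in messages)


# Sample transcript for illustration only.
flow = render_flow(
    [
        {"role": "system", "content": "You are a project assistant."},
        {"role": "user", "content": "Generate velocity report."},
        {"role": "assistant", "content": "Fetching sprint velocity metrics…"},
        {"role": "tool", "content": "{\"velocity\": 42}"},
        {"role": "assistant", "content": "Velocity for Sprint 3 is 42 points."},
    ]
)
print(flow)
```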

6.10 Engineering for Reproducibility and Trust

The pipeline embodies ChatML’s philosophy through code discipline:

| Design Value | Implementation Mechanism |
|---|---|
| Structure | Strict message schema and encoder/decoder |
| Hierarchy | Role-based routing functions |
| Reproducibility | Replayable ChatML transcripts |
| Transparency | Structured logging and tracing |
| Modularity | Pluggable tool and memory layers |

By enforcing these patterns, we turn conversational intelligence into deterministic, inspectable computation.

6.11 Extending the Pipeline

Future enhancements may include:

- Streaming interfaces for real-time assistant responses

- Concurrent pipelines for multiple projects

- Versioned system prompts for governance

- Event-driven orchestration using message queues

Each addition can still conform to ChatML’s schema, preserving consistency while scaling functionality.

6.12 Summary

| Layer | Purpose | Key Mechanism |
|---|---|---|
| Input | Structure user/system messages | ChatML Encoder |
| Logic | Route and process roles | Role Router |
| Output | Format and deliver results | ChatML Decoder |
| Memory | Persist and recall context | JSONL + Vector Store |

6.13 Closing Thoughts

A ChatML Pipeline is more than a data-processing framework — it is a formal architecture for reasoning.

By embedding structure and hierarchy directly into code, we ensure that every conversation is traceable, reproducible, and trustworthy.

In the Project Support Bot, this pipeline transforms abstract dialogue into concrete outcomes: velocity reports, sprint retrospectives, and transparent audit trails.

As we move to the next chapter, we’ll explore how ChatML pipelines evolve into multi-agent ecosystems, where specialized assistants cooperate within the same structured communication fabric — the next frontier in agentic AI.